Kremlin-Linked Troll Farm Spreads Fake News About Kamala Harris

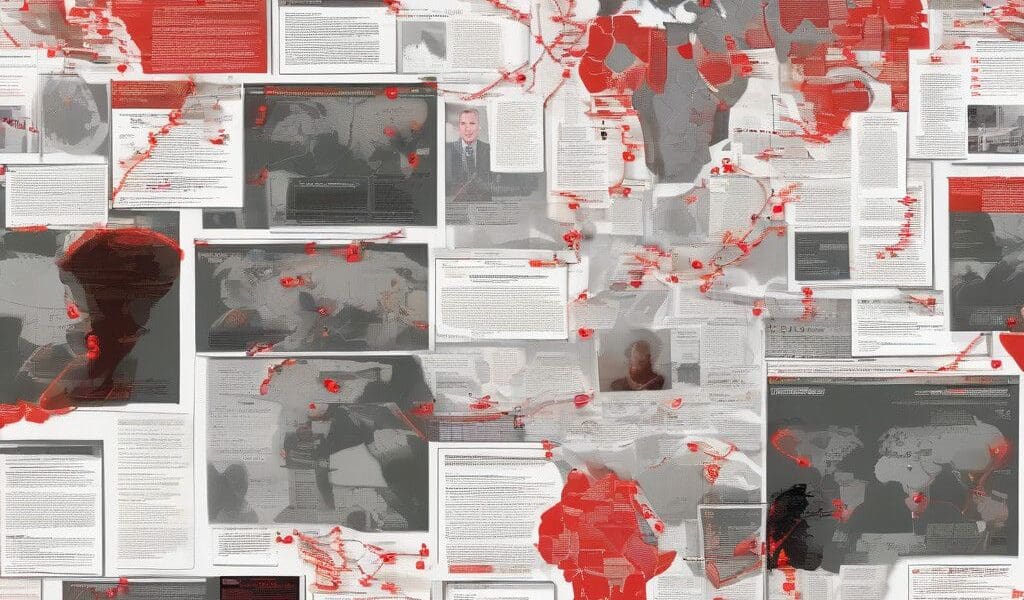

In the realm of digital marketing, few factors influence public opinion as powerfully as the spread of misinformation. Recent investigations by Microsoft have revealed a disconcerting operation linked to the Kremlin, aimed at tarnishing the reputation of Kamala Harris, the Democratic candidate in the upcoming U.S. presidential election. This report sheds light on the methods employed by organized troll farms and emphasizes the pressing need for robust digital marketing and information campaigns to counter these threats.

The operation, attributed to a group known as Storm-1516, focused on spreading disinformation about Harris. At the center of this campaign was a fabricated narrative accusing her of a hit-and-run that left a 13-year-old girl paralyzed. This narrative, while entirely fictional, was disseminated via fake news outlets, including a site purporting to be ‘KBSF-TV.’ Such tactics demonstrate a sophisticated understanding of social media dynamics and suggest that the trolls carefully crafted the content to go viral.

The implications of these actions extend beyond mere character assassination. Fake news campaigns contribute to a chilling climate in which voters may disengage and come to distrust legitimate information. According to a study conducted by the Pew Research Center, nearly 70% of Americans feel overwhelmed by the amount of misinformation they encounter online. This environment of confusion provides fertile ground for manipulative political strategies, as the Russian interference tactics show.

In an age where social media is a primary information source, the channels chosen for dissemination matter. The false claim against Harris gained traction primarily on platforms like X (formerly Twitter). This is particularly concerning, as social media algorithms often prioritize content that evokes strong reactions, whether supportive or dissenting. Pro-Russian figures, such as the controversial influencer Aussie Cossack, amplified these fake narratives, illustrating how influential individuals can shape discourse in a way that sidesteps traditional media vetting.

The infrastructure behind these troll farms reveals a concerted effort to simulate a genuine news cycle. By incorporating actors who impersonate journalists or whistleblowers, Storm-1516 created content that appears credible at first glance. This method not only misleads viewers but also fosters an environment where conspiracy theories thrive. The Microsoft investigation reveals that these fabricated videos were not just random posts but were part of a larger scheme to influence political sentiment and voter behavior as the elections approached.

Moreover, U.S. authorities, including the Justice Department, have indicated that this is not an isolated incident. Charges have been brought against Russian state media employees for money laundering linked to efforts to influence the upcoming elections. These actions reflect a broader strategy to exploit existing political divisions, bolstering support for anti-democratic movements and undermining public trust in institutions.

The ramifications of this misinformation campaign highlight the urgent need for improved digital literacy initiatives. Effective digital marketing should include strategies for identifying and countering fake news, a skill that many consumers currently lack. Collaborations between tech companies, educators, and governmental bodies could help develop educational programs that teach users how to distinguish credible sources from disinformation.

For businesses and political entities alike, there is a critical lesson to learn: the integrity of your message is paramount. This incident serves as a cautionary tale for all organizations about the potential impact of misinformation on brand reputation. Developing robust crisis communication plans is essential, as is fostering a transparent relationship with the audience through consistent and honest messaging.

As we move closer to a pivotal election, both political and commercial entities must remain vigilant against digital misinformation. Building resilience against such interference requires proactive measures, including monitoring social media for misleading narratives, deploying fact-checking resources, and investing in reputation management strategies.

The tale of Kamala Harris and the fabricated hit-and-run story is a stark reminder that the digital landscape is not just a marketing channel; it’s a battlefield for public perception. Digital marketers must step forward, using the tools at their disposal to uphold truth and counteract hostility born of misinformation. The future of democratic engagement and consumer trust hinges on our ability to adapt and respond to the challenges posed by these deceptive practices.