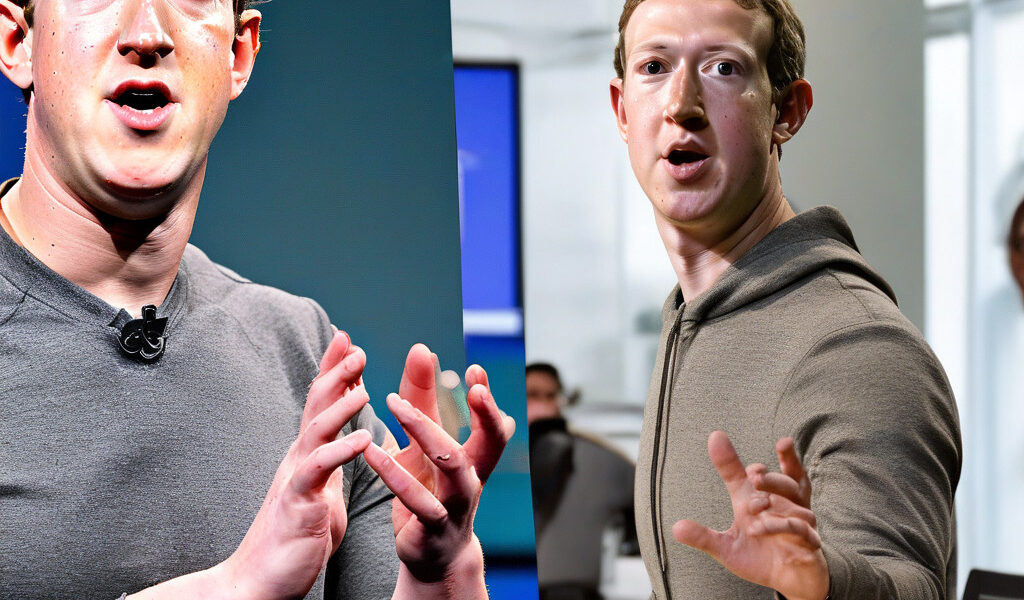

Zuckerberg Provides More Context on Meta’s Moderation Shift

In a recent move that has sparked debate across the tech industry, Meta, the company formerly known as Facebook, Inc., announced that it will do away with fact-checks on its platforms. This significant shift in moderation policy has left many wondering how it will affect the spread of misinformation and the overall user experience within the Meta ecosystem.

Mark Zuckerberg, Meta’s co-founder and CEO, took to social media to provide further context on the decision. According to Zuckerberg, removing fact-checks is part of Meta’s broader strategy to let users form their own conclusions and opinions from a diverse range of sources and perspectives.

In his statement, Zuckerberg emphasized the importance of promoting free speech while acknowledging how hard it is to moderate content in a way that is fair, transparent, and respectful of differing viewpoints. He argued that fact-checks, although well-intentioned, could be perceived as biased or restrictive, fueling concerns about censorship and ideological control.

By eliminating fact-checks, Meta aims to shift towards a more decentralized approach to content moderation, where users are encouraged to critically evaluate information for themselves rather than relying solely on platform-enforced labels or warnings. This move aligns with Meta’s vision of creating a space for open dialogue and exchange of ideas, even if they are controversial or unpopular.

Critics of Meta’s decision have raised valid concerns about the consequences of removing fact-checks, particularly for efforts to curb misinformation, fake news, and harmful content. Fact-checks have traditionally been a valuable tool for flagging misleading information and pointing users toward more reliable sources.

However, Zuckerberg reassured users that Meta remains committed to combating misinformation through other means, such as improved AI systems, user reporting mechanisms, and a user-written Community Notes system that replaces the third-party fact-checking program. By combining technology with human oversight, Meta aims to strike a balance between promoting free expression and maintaining a safe and trustworthy online environment.

As Meta navigates this transition in its moderation strategy, the coming months will be crucial in determining how effective this new approach will be in practice. User feedback, data analysis, and ongoing monitoring of content trends will play a vital role in shaping Meta’s evolving content moderation policies.

In conclusion, Zuckerberg’s remarks provide useful context on Meta’s decision to remove fact-checks and offer a glimpse of the company’s broader vision for content moderation. Although the move marks a significant shift in Meta’s approach, its stated goal remains the same: fostering open dialogue and empowering users to make informed decisions.

As Meta continues to adapt to the ever-changing digital landscape, it will be interesting to see how these changes impact the user experience and the broader online community.

#Meta, #Zuckerberg, #FactChecks, #ContentModeration, #DigitalEcosystem